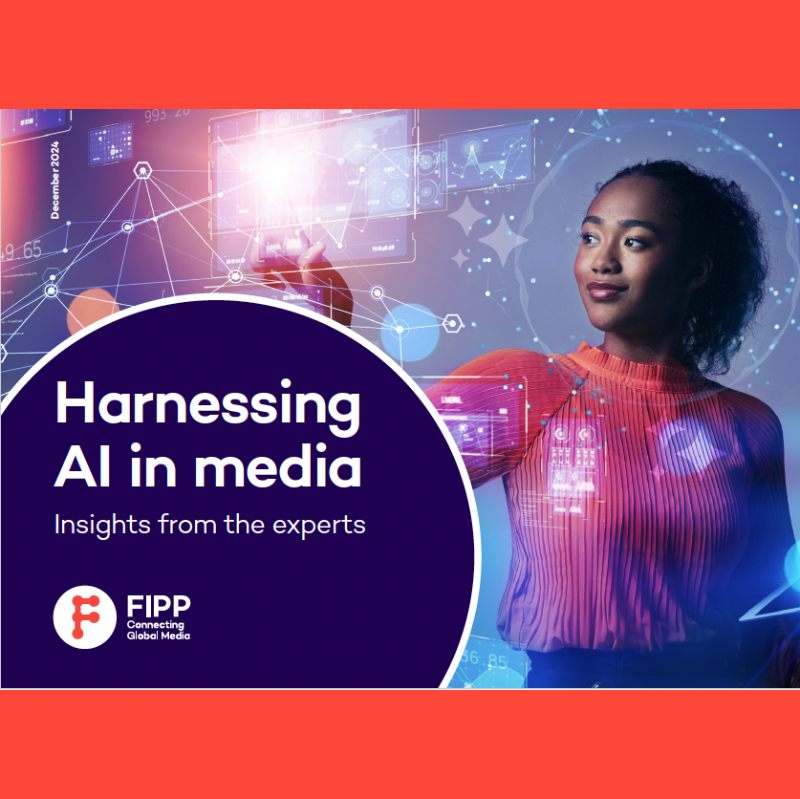

FIPP’s Media AI Principles Tracker

Artificial intelligence presents big opportunities but also an existential challenge to publishers and related industries. With that in mind, many media groups, tech giants and other organisations have drawn up new principles to govern how AI will be integrated into their operations. This page lists these guidelines, along with statements from the companies concerned, and will be updated as industries continue to adapt to the rapid growth of new technologies.

Does your media (or media-related) company have a set of AI principles that should be featured in this list? Reach out to Sylkia, Editor at FIPP.com.

Google

Statement

While we are optimistic about the potential of AI, we recognise that advanced technologies can raise important challenges that must be addressed clearly, thoughtfully, and affirmatively. These AI Principles describe our commitment to developing technology responsibly and work to establish specific application areas we will not pursue.

Principles

- Be socially beneficial

- Avoid creating or reinforcing unfair bias

- Be built and tested for safety

- Be accountable to people

- Incorporate privacy design principles

- Uphold high standards of scientific excellence

- Be made available for uses that accord with these principles

Ringier

Statement

A conscious approach to the possibilities of artificial intelligence (AI) is highly relevant, especially for a media company. That is why Ringier has decided to introduce guidelines for the use of artificial intelligence for all companies in the Group – which is represented in 19 countries. These will be continuously reviewed in the coming months and adjusted if necessary.

“We are convinced that through responsible interaction between humans and machines, based on clear AI rules, our products and processes can be further improved. By introducing these rules, we are taking on international responsibility to leverage the positive aspects of AI while minimising the risks. In this way, Ringier ensures that AI is used transparently and in line with its values.” – Ringier CEO Marc Walder

Ringier guidelines for dealing with AI

- The results generated by AI tools are always to be critically scrutinised and the information is to be verified, checked and supplemented using the company’s own judgment and expertise.

- As a general rule, content generated by AI tools shall be labeled. Labeling is not required in cases where an AI tool is used only as an aid.

- Our employees are not permitted to enter confidential information, trade secrets or personal data of journalistic sources, employees, customers or business partners or other natural persons into an AI tool.

- Development code will only be entered into an AI tool if it neither constitutes a trade secret nor belongs to third parties, and if doing so violates no copyrights or open source guidelines.

- The AI tools and technologies developed, integrated or used by Ringier shall always be fair, impartial and non-discriminatory. For this reason, Ringier’s own AI tools, technologies and integrations are subject to regular review.

The BBC

Statement

The BBC has published a Machine Learning Engine Principles (MLEP) framework, which comprises six guiding principles and a self-audit checklist for ML teams (engineers, data scientists, product managers, etc.). Grounded in public service values and designed to be practical, the MLEP framework is, we hope, a helpful contribution to the development of responsible and trustworthy AI and ML.

The Principles

- Reflecting the BBC’s values: The BBC’s ML engines will reflect the values of our organisation; upholding trust, putting audiences at the heart of everything we do, celebrating diversity, delivering quality and value for money and boosting creativity.

- Our audiences: Our audiences create the data which fuels some of the BBC’s ML engines, alongside BBC data. We hold audience-created data on their behalf and use it to improve their experiences with the BBC.

- Clear explanations: Audiences have a right to know what we are doing with their data. We will explain, in plain English, what data we collect and how this is being used, for example in personalisation and recommendations.

- Editorial values and broadening horizons: Where ML engines surface content, outcomes are compliant with the BBC’s editorial values (and where relevant as set out in our editorial guidelines). We will also seek to broaden, rather than narrow, our audience’s horizons.

- Taking responsibility (review, security and fairness): The BBC takes full responsibility for the functioning of our ML engines, both in-house and third-party. Through regular documentation, monitoring and review, we will ensure that data is handled securely and that our algorithms serve our audiences equally and fairly, so that the full breadth of the BBC is available to everyone.

- Human in the loop: ML is an evolving set of technologies, where the BBC continues to innovate and experiment. Algorithms form only part of the content discovery process for our audiences, and sit alongside (human) editorial curation.

Thomson Reuters

Statement

Thomson Reuters will adopt the following Data and AI Ethics Principles to promote trustworthiness in our continuous design, development, and deployment of artificial intelligence (“AI”) and our use of data.

We believe these Data and AI Ethics Principles will provide our colleagues and partners with the right foundations to build trustworthy, practical, and beneficial AI for our customers. These Data and AI Ethics Principles will evolve as the related industries continue to mature.

Principles

- That Thomson Reuters’ use of data and AI is informed by our Trust Principles.

- That Thomson Reuters will strive to partner with individuals and organizations who share similar ethical approaches to our own regarding the use of data, content, and AI.

- That Thomson Reuters will prioritise security and privacy in our use of data and throughout the design, development and deployment of our data and AI products and services.

- That Thomson Reuters will strive to maintain meaningful human involvement, and design, develop and deploy AI products and services and use data in a manner that treats people fairly.

- That Thomson Reuters aims to use data and to design, develop and deploy AI products and services that are reliable, consistent and empower socially responsible decisions.

- That Thomson Reuters will implement and maintain appropriate accountability measures for our use of data and our AI products and services.

- That Thomson Reuters will implement practices intended to make the use of data and AI in our products and services understandable.

- That Thomson Reuters will use employee data to ensure a safe and inclusive work environment and to ensure employee compliance with regulations and company policies.

Microsoft

Statement

When we embarked on our effort to operationalise Microsoft’s six AI principles, we knew there was a policy gap. Laws and norms had not caught up with AI’s unique risks or society’s needs. Yet, our product development teams needed concrete and actionable guidance as to what our principles meant and how they could uphold them.

We leveraged the expertise on our research, policy, and engineering teams to develop guidance on how to fill that gap. The Responsible AI Standard is the product of a multi-year effort to define product development requirements for responsible AI. We are making available this second version of the Responsible AI Standard to share what we have learned, invite feedback from others, and contribute to the discussion about building better norms and practices around AI.

While our Standard is an important step in Microsoft’s responsible AI journey, it is just one step. As we make progress with implementation, we expect to encounter challenges that require us to pause, reflect, and adjust. Our Standard will remain a living document, evolving to address new research, technologies, laws, and learnings from within and outside the company. There is a rich and active global dialog about how to create principled and actionable norms to ensure organisations develop and deploy AI responsibly. We have benefited from this discussion and will continue to contribute to it.

We believe that industry, academia, civil society, and government need to collaborate to advance the state-of-the-art and learn from one another. Together, we need to answer open research questions, close measurement gaps, and design new practices, patterns, resources, and tools.

Principles

The company’s commitment to responsible AI is founded on six core principles: fairness, inclusiveness, reliability and safety, transparency, accountability, and privacy and security.