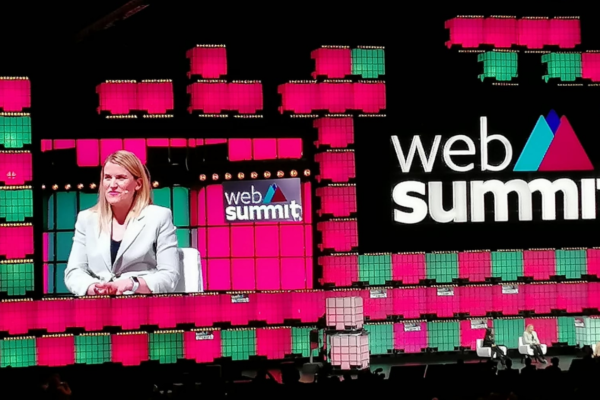

“I think Facebook would be stronger with a CEO who’s willing to focus on safety”: whistleblower Frances Haugen opens Web Summit in Lisbon

Frances Haugen, the Facebook whistleblower whose decision to leak internal documents has led to some of the most intense scrutiny ever experienced by the social media giant, tonight suggested Mark Zuckerberg should step aside so that the company can be led by someone “who’s willing to focus on safety” as she officially opened Web Summit in the Portuguese capital of Lisbon.

In her first appearance on a public stage, Haugen called for Facebook to urgently prioritise public safety over profits, focus on solutions and stop framing the debate about how it handles data and content as a “false choice between censorship and freedom of speech”.

The decision to speak out

Haugen left her post as a product manager at Facebook earlier this year, taking thousands of internal documents with her; these became the basis for a series of Wall Street Journal investigations into the company. She chose to waive her anonymity after being advised that it was the best way to achieve maximum impact. “I was being asked to think about what the future looked like,” she explained on stage. “I was told that if I wanted to have the impact I desired, I would have to come out.”

Her revelations have highlighted the role that Facebook plays in such troubling issues as ethnic violence, poor mental health of teenagers, increased polarisation of opinion and the spread of misinformation. Her criticism also applies to other Facebook-owned apps, including WhatsApp, Instagram and Messenger, which are used by 2.8 billion people every day.

While many of these criticisms have been levelled at Facebook before, Haugen’s revelations from inside the company have only added to a growing sense that Facebook is unwilling or unable to do what is necessary to curb the worst consequences of its enormous global reach.

The human cost of profit-seeking

Haugen described feeling a little overwhelmed by all the attention. “I never wanted to be the centre of attention. I’ve never liked it,” she said. “I’m just glad to have the opportunity to bring this to light and grateful that people have taken seriously the evidence I’ve disclosed – it represents a pattern of behaviour that has prioritised profit over people.”

Speaking about her decision to leak the documents by working with the WSJ and the charity Whistleblower Aid (whose CEO Libby Liu joined Haugen on stage), Haugen said: “There’s always a trade-off between what you can bring out with you and when you leave. How long can you sit with knowledge, and how can you have maximum impact with what you know? I came to the conclusion that I couldn’t responsibly sit with what I knew any longer.”

After leaving her job at Facebook, Haugen had “a crisis of confidence” while living with her mother, who is an Episcopal priest. “I interrogated my own logic and became confident that I wasn’t deluding myself,” she said. “My mother helped me to follow my conscience.”

“Facebook is full of good people”

Haugen emphasised that she wasn’t out to paint Facebook as a company beyond redemption. “There are a lot of kind, smart, conscientious people inside Facebook,” she said. “One of the human costs of Facebook’s institutional decision-making is that people like me, we come in wanting to work on misinformation and improve things. But then we learn things that make us realise that people’s lives are being put at risk.”

She added that the WSJ had been a very good institution to work with for the first rollout of the leaks. “There was a risk that all of this material could be sensationalist – we’re talking about genocide, human trafficking. Rather than making Facebook look inherently bad, I wanted people to understand the incentives and dynamics that have led good people who work there in this direction.”

She also knew she wanted non-English-speaking journalists to have access to the documents in some way. “We worked with the press outside of the US. That’s why we had rings of expansion in this process.”

Facebook’s destructive algorithm and the danger of engagement-based ranking

Haugen zeroed in on Facebook’s algorithm as the main driver of many of the issues she has drawn to the public’s attention. “The algorithm works by prioritising and amplifying the most polarising and divisive content,” she explained.

While this is bad enough in places like the US and western Europe, which have strong democratic institutions, “in more fragile places in the world, it stokes division and conflict – places like Ethiopia, which is currently experiencing massive internal conflict.” Ethiopia has more than 100m people, with five official languages and dozens more spoken across the population. “And Facebook in these places doesn’t have even the most basic safety systems in place, such as AI to remove the most dangerous content, because you have to roll them out language by language,” said Haugen. This, she said, is a fatal flaw in the foundations of its safety system.

“A false choice between censorship and free speech”

The single most important thing to take away from her revelations, Haugen said, is that Facebook is “trying to reduce everything down to a false choice: do you want censorship or free speech?” Yet this framing sidesteps the fact that “non-content-based solutions do exist, and are effective, for making Facebook safer,” added Haugen. “These would work by making the platform smaller, slower.

“Bad people posting bad things are not the issue – it’s about who gets the largest megaphone. Facebook is reliant on these huge groups [of users], and it has tied its hands with those groups. Engagement-based ranking is dangerous, because the most extreme posts are the ones that get the largest audience. It is only this tiny amount of content that drives most of Facebook’s integrity problems.”

Another false choice continually propagated by Facebook is between privacy and data, Haugen added. “But there are a lot of ways that data can be released, such as in an aggregated way, that don’t violate people’s privacy,” she said, pointing out that other tech companies are much better at being transparent about data. “I think there needs to be mandated release of data. We need mandated, accountable transparency. We need 10,000 eyes looking at Facebook and how it uses data.”

An issue of organisational structure

Haugen’s background as a data scientist with an MBA makes her quite unusual in Silicon Valley. “My education emphasised that technologists also need to be well rounded in the humanities,” she said. “I’m a big believer in studying organisational structures, and part of the problem with Facebook is that the group of people that’s responsible for reporting to politicians is the same as the branch that makes decisions about safe content. That’s different to Twitter, for example.

“Part of the reason I could make these criticisms is because Facebook is the fourth social network I’ve worked at. Things are substantially worse at Facebook than the other social networks I’ve seen.”

Asked about the allegation that she cherry-picked the documents to release, Haugen responded: “There’s a very simple solution to that: they can release more documents. Google and Twitter are far more transparent than Facebook. In fact, the Twitter firehose catches a lot of Facebook’s problem posts.

“I believe in the power of archives, of documentation. Every human being deserves the dignity of the truth – that’s what my mother says. I am doing this in the hope that we can make decisions for the public good.”

“Facebook has a Meta problem”

Last week’s big story was Mark Zuckerberg’s announcement that Facebook’s parent company would be rebranded as Meta, in an attempt to put distance between the revelations and the company. “There’s a Meta problem at Facebook,” said Haugen. The problem, she explained, is that Facebook continually chooses expansion over fixing basic safety systems in the places where it already operates. “It’s unconscionable,” she said.

Asked if Zuckerberg should step down, Haugen responded carefully: “At a minimum, the shareholders should have the right to choose their own CEO. I hope that [Zuckerberg] sees that there’s so much good he could do in the world. I think Facebook would be stronger with someone who’s willing to focus on safety … so yes.”

Before officially opening Web Summit, Haugen made perhaps a more direct appeal to the audience, which included many people working in the tech industry. “I still have faith that Facebook can change for the better,” she remarked. “I look forward to seeing it in five years’ time.”

She emphasised: “It doesn’t make [Zuckerberg] a bad person if he makes mistakes. But it is unacceptable to continue to make the same mistakes over and over again.”

Haugen was preceded at Web Summit’s opening night by Black Lives Matter co-founder Ayọ Tometi, who spoke about the movement’s web-based origins and keeping human values at the centre of technological advancement.

Web Summit is Europe’s best-attended and most highly regarded tech conference, and returns this year after a two-year hiatus due to the pandemic. It is expected to draw 40,000 attendees this week. Follow FIPP on Twitter for our live updates from Lisbon.