Can machine learning detect “fake news”?

Fake news is nothing new. The Roman Emperor Augustus led a campaign of misinformation against Mark Antony, a rival politician and general. The KGB used disinformation throughout the Cold War to enhance its political standing. Today fake news continues to serve as a political tool around the world, and new technologies are enabling individuals to propagate that fake news at unprecedented rates.

One of those new developments, artificial intelligence (AI), can help journalists build a consistent fake news detector, but AI can also empower others to disseminate and even create new forms of misinformation. To understand how, we need to take a quick detour to explain machine learning, one of the most important sub-domains of artificial intelligence.

Detecting fake news with machine learning

Machine learning is, in the most basic sense, a system that learns from examples and makes decisions accordingly. One of its most powerful subfields, deep learning, uses layered neural networks to break a single complex idea down into a series of smaller, more approachable steps.

Thus, conceptually, machine learning can help detect fake news! Picture an intelligent system that takes news stories as its input and produces a big ol’ ‘Fake’ or ‘Not fake’ sticker as its output.

Machine learning (and deep learning) relies on algorithms: sets of rules that, when followed, lead to a desired output. But constructing good algorithms is exceptionally difficult, and the results of a flawed one can be catastrophic, especially when we rely on it to decide which news stories should be broadcast to our readers.

Algorithms make mistakes

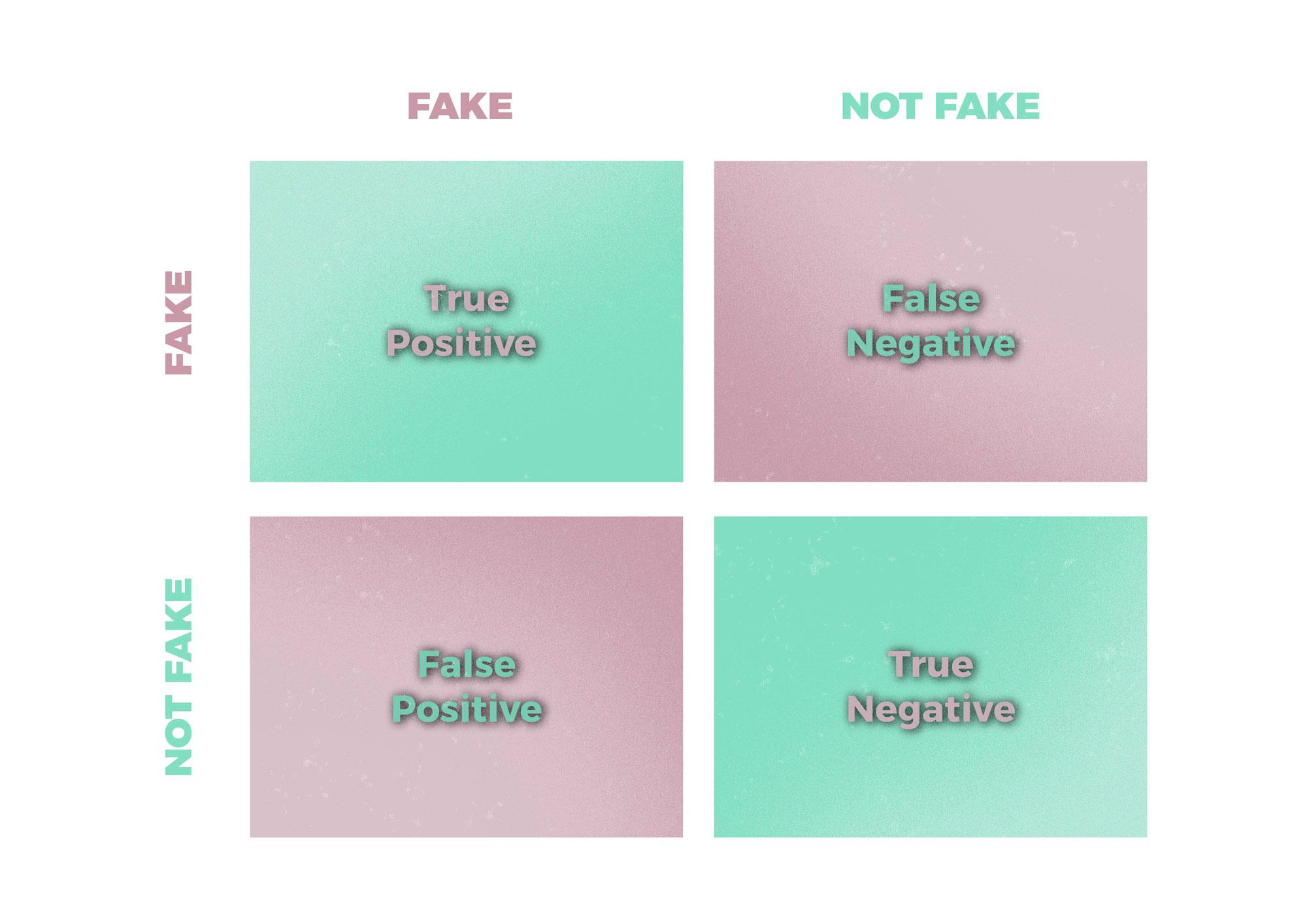

Treating a ‘Fake’ label as a positive result, the two kinds of error this sort of system can make are described with terms we borrow from statisticians: Type I (false positive) and Type II (false negative) errors.

A false negative would mean that your machine labels a fake news item as not fake. We don’t want that.

A false positive means your machine labels a real news story as fake. We don’t want that, either.
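To make the two error types concrete, here is a minimal sketch that compares a system's labels against the true labels and counts each outcome. It assumes ‘fake’ is the positive class, and the six labels are made-up toy data, not results from any real detector; the function name `confusion_counts` is hypothetical.

```python
# Minimal sketch: tally the four possible outcomes of a fake news classifier.
# Assumption: "fake" is the positive class; the labels below are invented toy data.

def confusion_counts(actual, predicted, positive="fake"):
    """Count true/false positives and negatives for a binary classifier."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1  # fake story correctly labelled fake
        elif p == positive:
            fp += 1  # real story wrongly labelled fake (Type I, false positive)
        elif a == positive:
            fn += 1  # fake story wrongly labelled real (Type II, false negative)
        else:
            tn += 1  # real story correctly labelled real
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


actual = ["fake", "real", "fake", "real", "real", "fake"]
predicted = ["fake", "fake", "real", "real", "real", "fake"]

counts = confusion_counts(actual, predicted)
print(counts)  # {'tp': 2, 'fp': 1, 'fn': 1, 'tn': 2}
```

In this toy run the system gets four of six stories right, but it makes one mistake of each kind, which is exactly the pair of errors described above.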

What we want is a system that can, with a high level of accuracy, label fake news as being fake, and real stories as not being fake. Again, we ask: How?

We use news data to teach our algorithm.

Using news articles to train the AI

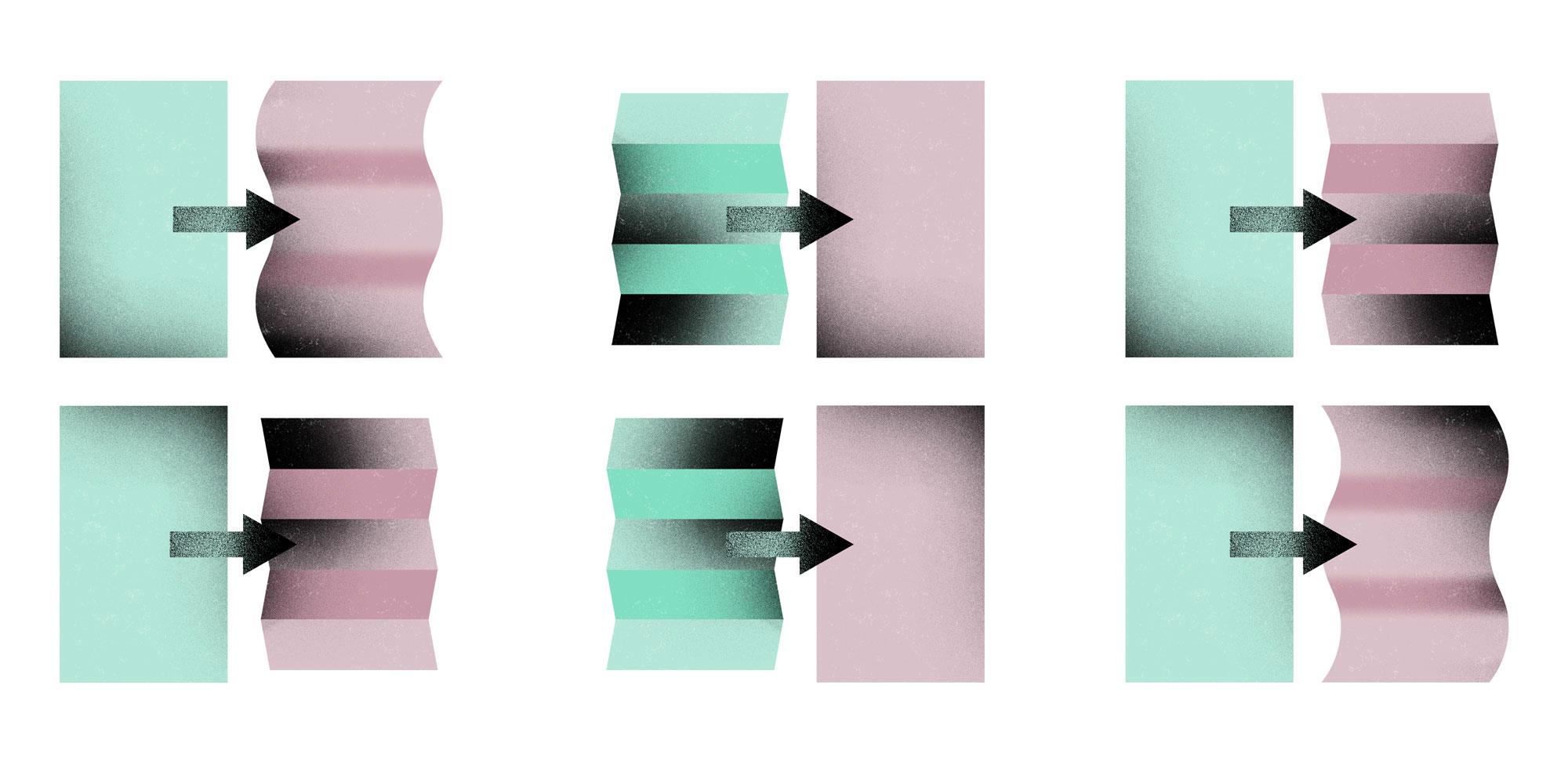

AI systems are best able to decide what is and is not fake news once you have defined fake and real news for them, and you do so by showing the machine tens of thousands of labelled examples of each.

For that, you will need a data set of high-quality journalism, as well as a second collection containing a sample of fake news, which could be drawn from a predetermined list of known fake news.
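As an illustration of “teaching by example”, the sketch below trains a tiny multinomial Naive Bayes classifier, one of the simplest text-classification techniques, on labelled headlines. This is a swapped-in technique chosen for brevity, not the method any particular newsroom uses; the four training headlines are invented, and the names `train` and `classify` are hypothetical. A real detector would need the tens of thousands of examples described above.

```python
import math
from collections import Counter

# Minimal sketch of a Naive Bayes text classifier trained on labelled examples.
# Assumption: the toy headlines below are invented purely for illustration.


def tokenize(text):
    return text.lower().split()


def train(examples):
    """examples: list of (text, label) pairs. Returns word counts, doc counts, vocab."""
    word_counts, doc_counts, vocab = {}, Counter(), set()
    for text, label in examples:
        doc_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return word_counts, doc_counts, vocab


def classify(model, text):
    """Return the label with the highest log-probability for this text."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label in doc_counts:
        score = math.log(doc_counts[label] / total_docs)  # prior: share of training docs
        label_total = sum(word_counts[label].values())
        for word in tokenize(text):
            # Add-one smoothing so an unseen word doesn't zero out the probability.
            score += math.log((word_counts[label][word] + 1) / (label_total + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training = [
    ("shocking miracle cure doctors hate", "fake"),
    ("you won't believe this shocking secret", "fake"),
    ("city council approves annual budget", "real"),
    ("central bank holds interest rates steady", "real"),
]

model = train(training)
print(classify(model, "shocking secret cure"))            # fake
print(classify(model, "council approves interest rates"))  # real
```

The model only knows the words it has seen, which is why the quality and size of both collections matter so much.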

The AI journalist

Remember, algorithms are written by humans, and humans make errors. Therefore, our AI machine may well make an error, especially in its early stages.

It’s the modern journalist’s job to know what her system is doing in order to be confident in what she is publishing.

There’s also an editorial decision to be made here. No system is going to be 100 per cent accurate, so which would you rather tend towards, false positives or false negatives? Would you rather a fake story be labelled as real or would you rather have a real story labelled fake?
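One common way this editorial choice shows up in practice is as a decision threshold: many classifiers output a score, and the newsroom picks the cut-off above which a story is labelled fake. The sketch below assumes invented scores (read as “probability the story is fake”) for eight stories and shows how moving that hypothetical threshold trades one kind of error for the other.

```python
# Minimal sketch: how a decision threshold trades false positives for false negatives.
# Assumption: the scores are invented, standing in for any classifier's output.

scored = [
    (0.95, "fake"), (0.80, "fake"), (0.65, "real"), (0.55, "fake"),
    (0.40, "real"), (0.30, "fake"), (0.20, "real"), (0.05, "real"),
]


def errors_at(threshold, scored):
    """Return (false positives, false negatives) when labelling score >= threshold as fake."""
    fp = fn = 0
    for score, actual in scored:
        predicted = "fake" if score >= threshold else "real"
        if predicted == "fake" and actual == "real":
            fp += 1  # real story labelled fake
        elif predicted == "real" and actual == "fake":
            fn += 1  # fake story labelled real
    return fp, fn


for t in (0.25, 0.50, 0.75):
    fp, fn = errors_at(t, scored)
    print(f"threshold={t:.2f}  false positives={fp}  false negatives={fn}")
```

A low threshold catches every fake story here but flags two real ones; a high threshold never flags a real story but lets two fakes through. Neither setting is “correct”: it is the editorial decision described above, expressed as a number.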

Algorithmic errors aside, AI can help detect fake news. But, as we mentioned earlier, AI can also help disseminate it (all the more reason to understand AI, then!).

A new wave of “fake news”?

If you’ve worked in the journalism industry long enough, you’ve probably been fooled by a doctored video, photo or sound bite. And every day the technology used to produce those fake news items is becoming easier to use and more publicly accessible. For instance, Adobe recently announced an AI project that can replicate a person’s voice simply by analysing a sample of their speech, while a project developed by Stanford University researchers enables the manipulation of someone’s face in a video in real time.

In other words, the same sorts of machine learning and sub-domains of AI that can be used to fight fake news can also be used by others to propagate new types of misinformation.

The conclusion: Journalists need to understand AI.

Francesco first published this article on LinkedIn. It is reproduced with his permission here.

About Francesco:

Francesco Marconi is responsible for strategy and corporate development at the Associated Press, where he is part of the strategic planning team, identifying partnership opportunities and guiding media strategy. Francesco complements his professional activity with academic research at Columbia University’s Tow Center for Digital Journalism, where he is an Innovation Fellow.

Francesco studied business and journalism at the University of Missouri and completed his postgraduate work as a Chazen Scholar at Columbia Business School’s Media Program. In 2014 he joined Harvard University’s Berkman Center for Internet and Society as an affiliate researcher studying the impact of data in journalism. Francesco started his career at the United Nations researching science and technology solutions for developing countries, work that led to his first book and a TED talk on Reverse Innovation.
